Building new features and functionality has never been easier in software development. I would like to share a recent project I built with Azure products such as Logic Apps, Azure Tables, and the Azure Storage JavaScript SDK, and how smooth the experience of building it was. Also, a shout-out to the Microsoft developers who write fantastic comments and examples in their npm modules.

The project was to create a social media data aggregator that uses authenticated HTTP requests against Meta’s API to access the Instagram Basic Display API. The application’s function is to run twice daily and insert/merge new changes from a specified Instagram account into a fast data source that can be queried and sorted. After spending hours googling for a solution, I felt defeated, as the knowledge and resources online are very limited for this type of project. I am writing this article in the hope that it saves someone some time and effort. The only alternatives I found were paid SaaS offerings costing $25-$125/month. Save some money with my architecture! Let’s dive in:

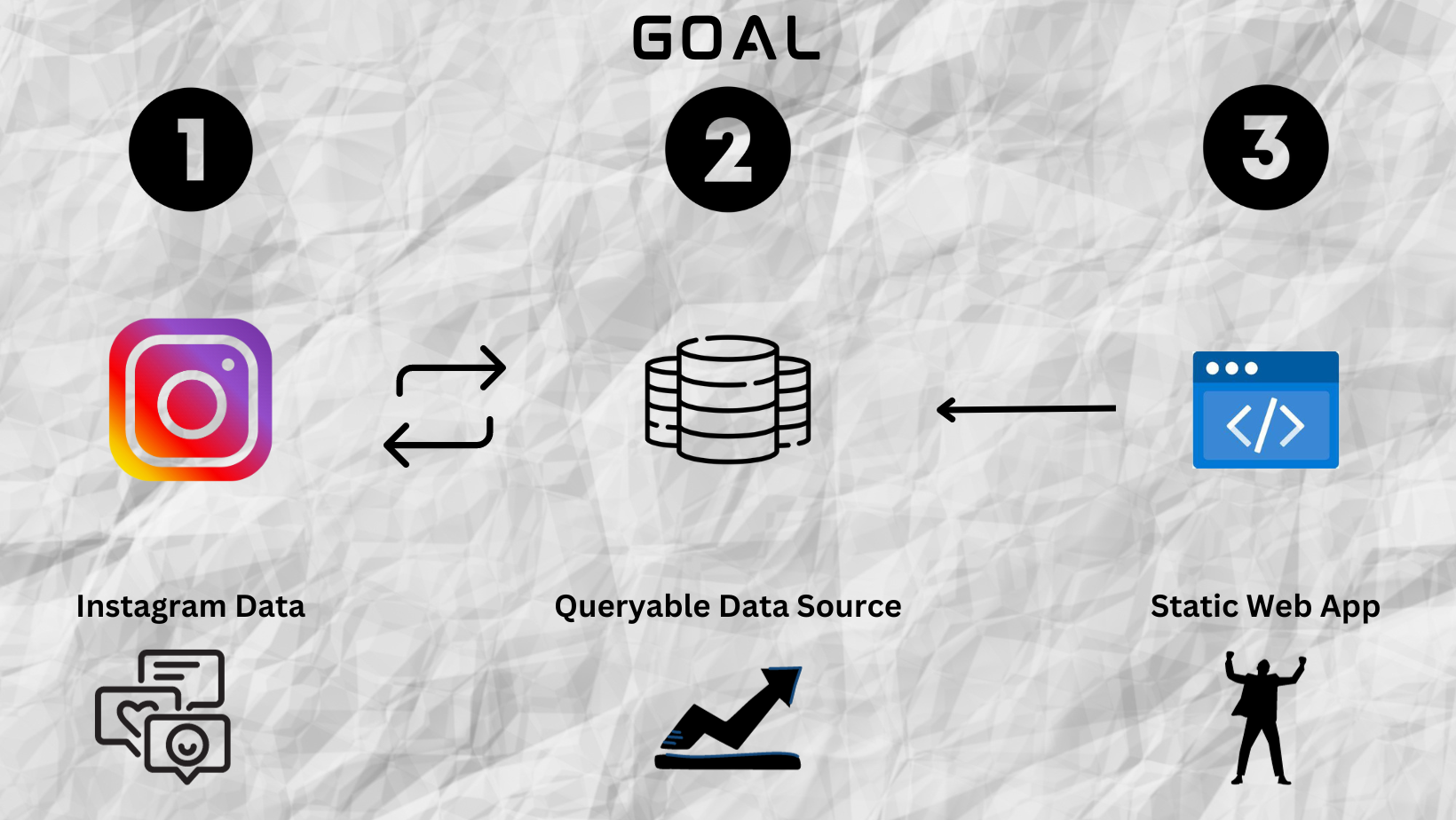

Project Goal:

Create an Instagram Data Aggregator using Azure and the Meta API that can be called and sorted without data sprawl.

First, I created a Meta Developer account and added the “Instagram Basic Display” platform in the Meta Developer portal. Next, I had to verify some information for my developer account to be able to access the API’s data. After this step, I was able to send an “Instagram Tester” request/invitation to the Instagram account I was looking to pull the data from.

Meta Developer Portal: https://developers.facebook.com/

After the account owner approved the application (found under “Websites and Apps” in the Instagram account settings), I was able to retrieve the data from the API using Postman. After verifying that Postman returned the proper data, I created a storage account in Azure so I could use Azure Tables to store the data and get fast load times when querying it via the Azure Tables REST API. I created a table named “InstagramPosts”.
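The Postman check above can also be reproduced in code. This is a minimal sketch, not the exact request used in this project: it assumes the Basic Display media endpoint at graph.instagram.com/me/media, an illustrative field list, and a token passed as the access_token query parameter (my own setup used authorization headers). The helper names buildMediaUrl and fetchMedia are hypothetical.

```javascript
// Hypothetical helper: builds the media request URL.
// Assumptions: endpoint, field list, and access_token query parameter.
function buildMediaUrl(
  accessToken,
  fields = ["id", "caption", "media_type", "media_url", "permalink", "timestamp"]
) {
  const url = new URL("https://graph.instagram.com/me/media");
  url.searchParams.set("fields", fields.join(","));
  url.searchParams.set("access_token", accessToken);
  return url.toString();
}

// Hypothetical helper: fetches one page of media items.
// Relies on the global fetch available in Node 18+ and modern browsers.
async function fetchMedia(accessToken) {
  const response = await fetch(buildMediaUrl(accessToken));
  if (!response.ok) {
    throw new Error(`Instagram API request failed with status ${response.status}`);
  }
  const body = await response.json();
  // The API wraps results in a "data" array; fall back to an empty list.
  return body.data ?? [];
}
```

Keeping the URL construction separate from the network call makes it easy to check the request shape before spending any API calls.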

Next, I created a Consumption-based Logic App with a daily recurrence trigger. The next action was an HTTP request with the same authorization headers I had created for my Postman test.

Azure Logic App Documentation: https://learn.microsoft.com/en-us/azure/logic-apps/

After running the trigger and verifying that I was getting the output from the Meta API in Logic Apps, I added an action to parse the JSON from the API, then used the Merge/Insert Azure Tables action to store any new data that didn’t have a matching PostID in my Azure Tables storage account.

Azure Tables Logic App Connector Documentation: https://learn.microsoft.com/en-us/connectors/azuretables/
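To make the merge/insert step concrete, here is a rough sketch of how one parsed post could be shaped into a table entity and how insert-or-merge behaves. The property names, the "instagram" partition key, and both helper functions are assumptions for illustration, and the Map is only an in-memory stand-in for the table, not the Logic Apps connector itself.

```javascript
// Hypothetical mapping from one parsed media item to a table entity.
// The post ID becomes the row key, so each post maps to exactly one row.
function toTableEntity(post, partitionKey = "instagram") {
  return {
    partitionKey,
    rowKey: String(post.id),
    caption: post.caption ?? "",
    mediaType: post.media_type,
    mediaUrl: post.media_url,
    permalink: post.permalink,
    postedAt: post.timestamp,
  };
}

// In-memory stand-in for insert-or-merge: a new key is inserted, while an
// existing key keeps its old properties and takes the incoming ones on top.
function insertOrMerge(table, entity) {
  const key = `${entity.partitionKey}|${entity.rowKey}`;
  table.set(key, { ...(table.get(key) ?? {}), ...entity });
  return table;
}

// Usage: running the same post through twice leaves a single row.
const table = new Map();
insertOrMerge(table, toTableEntity({ id: "1", caption: "first draft" }));
insertOrMerge(table, toTableEntity({ id: "1", caption: "edited caption" }));
```

Keying rows on the post ID is what keeps repeated runs from creating duplicates, which is how the aggregator avoids data sprawl.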

With the data in Azure Tables, I queried it from my application using the @azure/data-tables package:

```javascript
import { TableClient } from "@azure/data-tables";

export async function queryTables() {
  // Placeholder connection string; keep the real account key out of source control.
  const accountConnectionString =
    "DefaultEndpointsProtocol=https;AccountName=<name of account>;AccountKey=<account key>;EndpointSuffix=core.windows.net";
  const tableName = "<tablename>";

  // The table name is supplied when the client is created.
  const tableClient = TableClient.fromConnectionString(
    accountConnectionString,
    tableName
  );

  // listEntities() returns an async iterator; this loop collects the
  // entities from all the pages returned by the service.
  const entityArray = [];
  for await (const entity of tableClient.listEntities()) {
    entityArray.push(entity);
  }

  // Sort newest first by the service-managed timestamp.
  const sortedEntities = entityArray.sort(
    (a, b) => new Date(b.timestamp) - new Date(a.timestamp)
  );

  // Return the six most recent entities.
  return sortedEntities.slice(0, 6);
}
```

From here I was able to call the Azure Tables API at build time in my application. I also created a scheduled GitHub Actions workflow called “NightlyBuild.yaml” that rebuilds my Azure Static Web App daily; the app consumes the Azure Tables data at build time, which reduces API calls.
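The sorting step is easy to check in isolation, without a storage account. The sketch below is a hypothetical helper (latestEntities is not part of any SDK) that assumes each entity carries an ISO 8601 timestamp string, and it runs against made-up sample rows.

```javascript
// Hypothetical helper: returns the `count` most recent entities, newest first.
// Copies the input so the caller's array is not reordered.
function latestEntities(entities, count = 6) {
  return [...entities]
    .sort((a, b) => new Date(b.timestamp) - new Date(a.timestamp))
    .slice(0, count);
}

// Made-up sample rows for illustration only.
const sampleRows = [
  { rowKey: "a", timestamp: "2023-01-01T10:00:00Z" },
  { rowKey: "b", timestamp: "2023-03-01T10:00:00Z" },
  { rowKey: "c", timestamp: "2023-02-01T10:00:00Z" },
];

const newestTwo = latestEntities(sampleRows, 2).map((row) => row.rowKey);
```

Because the helper uses slice, a table with fewer rows than requested simply returns what exists instead of padding the result with undefined entries.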

```yaml
name: Nightly Build

on:
  schedule:
    - cron: '0 3 * * *'

jobs:
  build_and_deploy:
    runs-on: ubuntu-latest
    name: Build and Deploy Job
    steps:
      - uses: actions/checkout@v3
        with:
          submodules: true
          lfs: false
      - name: Build And Deploy
        id: builddeploy
        uses: Azure/static-web-apps-deploy@v1
        with:
          azure_static_web_apps_api_token: ${{ secrets.AZURE_STATIC_WEB_APPS_API_TOKEN }}
          repo_token: ${{ secrets.GITHUB_TOKEN }} # Used for Github integrations (i.e. PR comments)
          action: "upload"
          ###### Repository/Build Configurations - These values can be configured to match your app requirements. ######
          # For more information regarding Static Web App workflow configurations, please visit: https://aka.ms/swaworkflowconfig
          app_location: "/" # App source code path
          api_location: "" # Api source code path - optional
          output_location: "dist" # Built app content directory - optional
          ###### End of Repository/Build Configurations ######
```

Things I Added for Production:

• Failure alerting on the Logic App

• Failure alerting on the GitHub Actions workflow NightlyBuild.yaml

Project Outcome:

Created an Instagram Data Aggregator using Azure Logic Apps and Azure Tables that queries the Meta API and stores the results so they can be called and sorted on demand using the Azure Storage JavaScript SDK.

I hope you enjoyed this quick look at how fast solutions can be created with Azure! This solution took less than a day to architect, test, implement, secure, and set up with monitoring and alerting. Try doing that in a day without the cloud. Phew!

Are you stuck in Azure? Are you looking for help developing systems that follow the five pillars of architectural excellence: Reliability, Security, Cost Optimization, Operational Excellence, and Performance Efficiency?

Then look no further. Reach out to me here on my blog or via a LinkedIn message. I can connect you with industry-leading experts who can solve your problems with solutions that are right-sized for your business, budget, and timescale.